Hi, I am Lin Li (李琳).

I am a postdoctoral fellow at the AI Chip Center for Emerging Smart Systems (ACCESS), The Hong Kong University of Science and Technology (HKUST), advised by Prof. Kwang-Ting (Tim) Cheng. I also collaborate with Prof. Long Chen at HKUST. Before that, I received my PhD in Computer Science and Technology from Zhejiang University (ZJU), supervised by Prof. Jun Xiao.

My research interests include multimodal large language models and scene understanding.

If you are interested in my work, I am always open to discussions and collaborations. Feel free to reach out to me at lllidy[at]ust.hk.

📝 Publications

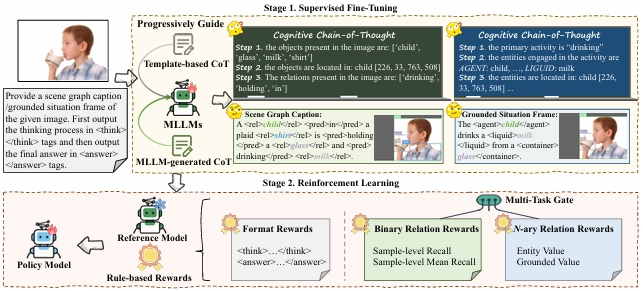

Lin Li*, Wei Chen*, Jiahui Li, Kwang-Ting Cheng, Long Chen

- The first unified relation comprehension framework that explicitly integrates cognitive chain-of-thought (CoT)-guided supervised fine-tuning and group relative policy optimization (GRPO) within a reinforcement learning paradigm.

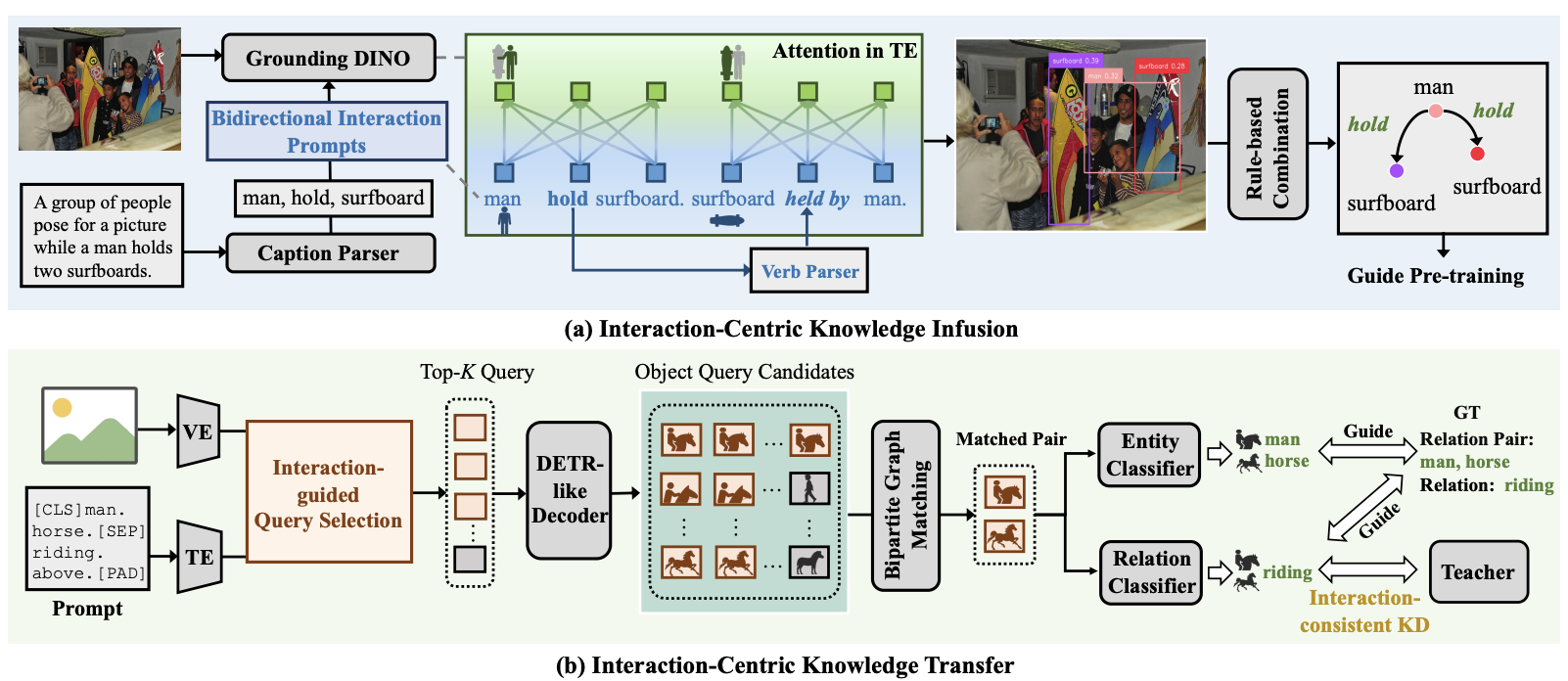

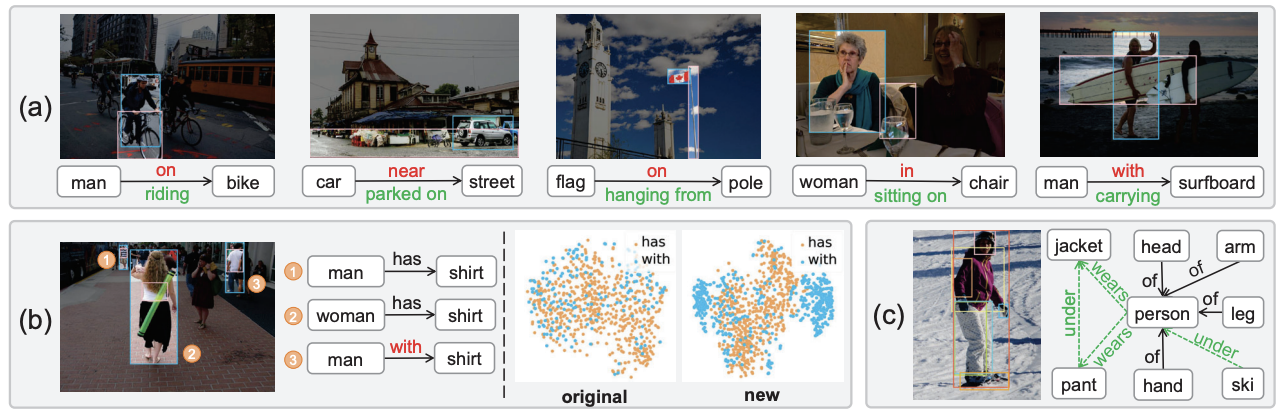

Interaction-centric knowledge infusion and transfer for open-vocabulary scene graph generation

Lin Li, Chuhan Zhang, Dong Zhang, Chong Sun, Chen Li, Long Chen

- An interaction-centric, end-to-end open-vocabulary scene graph generation (OVSGG) framework that shifts the paradigm from object-level representations to interaction-driven learning.

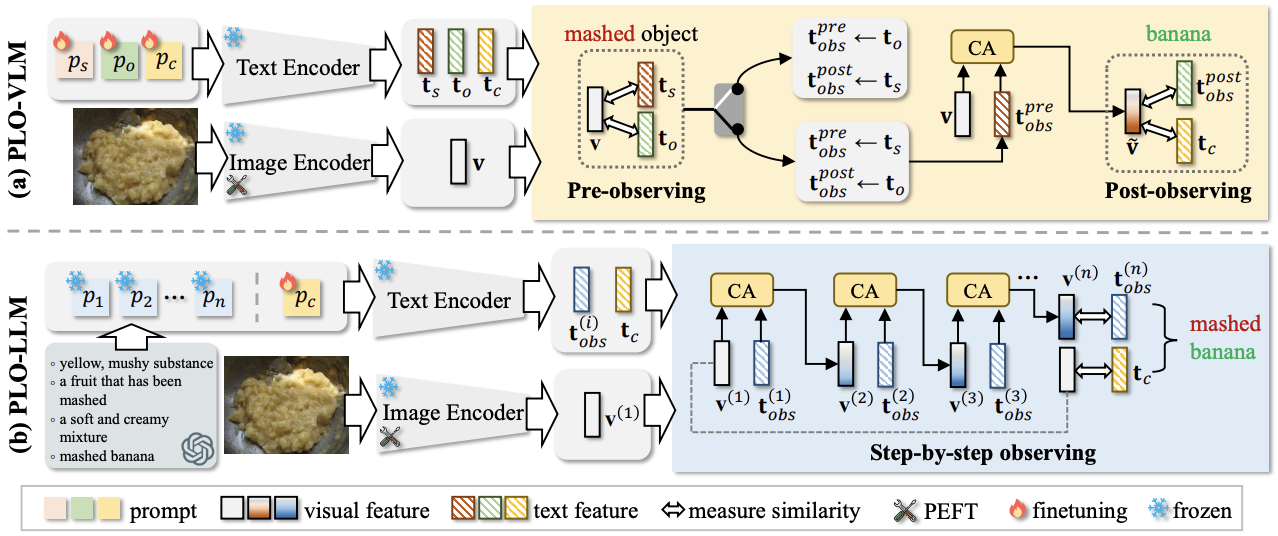

Compositional zero-shot learning via progressive language-based observations

Lin Li, Guikun Chen, Zhen Wang, Jun Xiao, Long Chen

- Automatically allocating the observation order in the form of primitive concepts or graduated descriptions, enabling effective prediction of unseen state-object compositions.

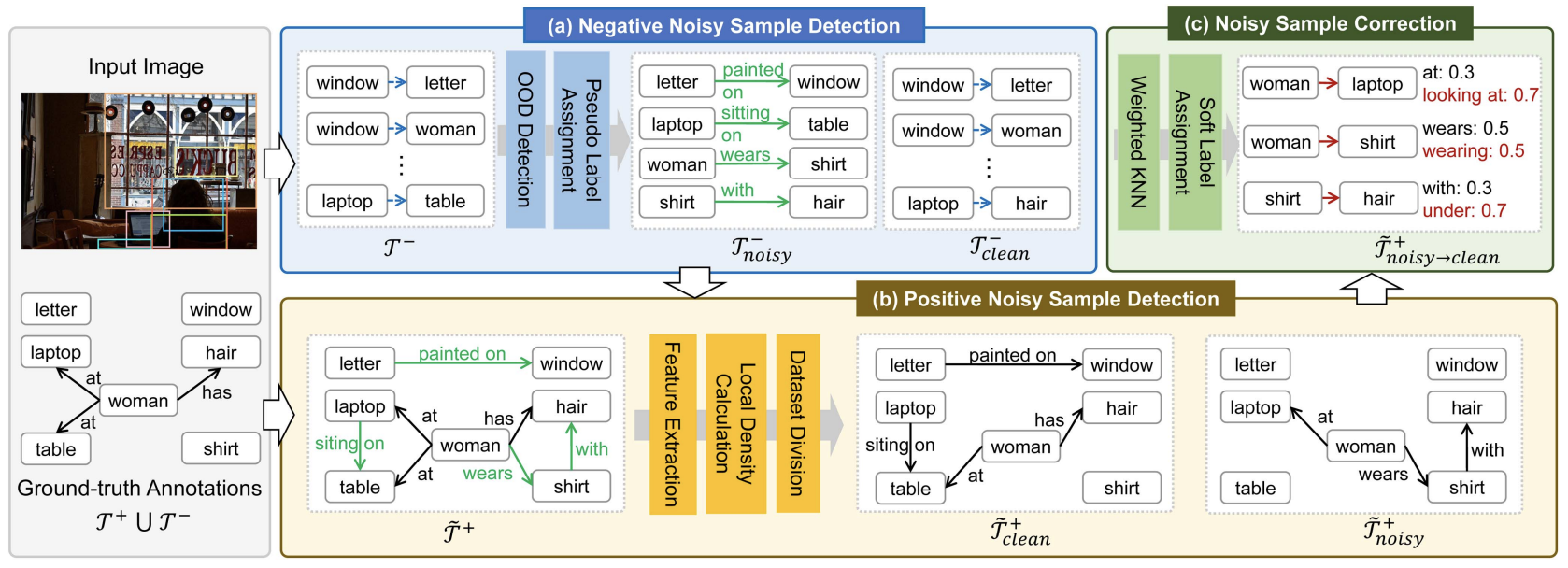

NICEST: Noisy label correction and training for robust scene graph generation

Lin Li, Jun Xiao, Hanrong Shi, Hanwang Zhang, Yi Yang, Wei Liu, Long Chen

- A new out-of-distribution scene graph generation dataset, VG-OOD, and a debiased knowledge distillation strategy.

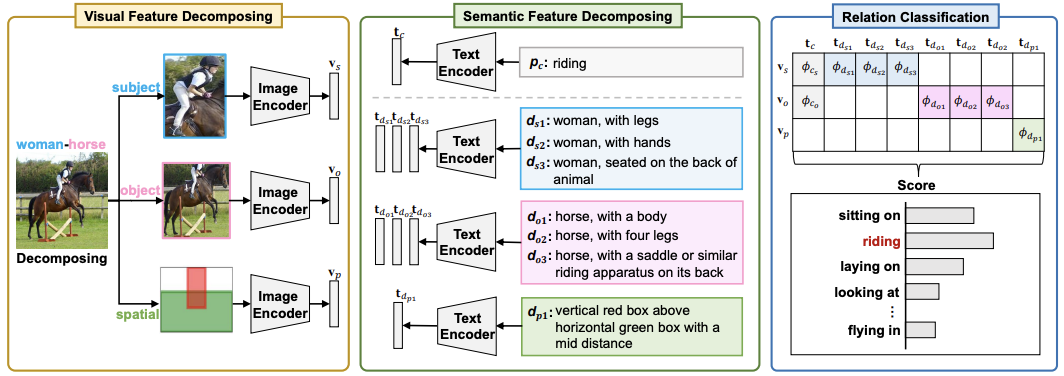

Zero-shot visual relation detection via composite visual cues from large language models

Lin Li, Jun Xiao, Guikun Chen, Jian Shao, Yueting Zhuang, Long Chen

- The first exploration of zero-shot visual relation detection via composite description prompts.

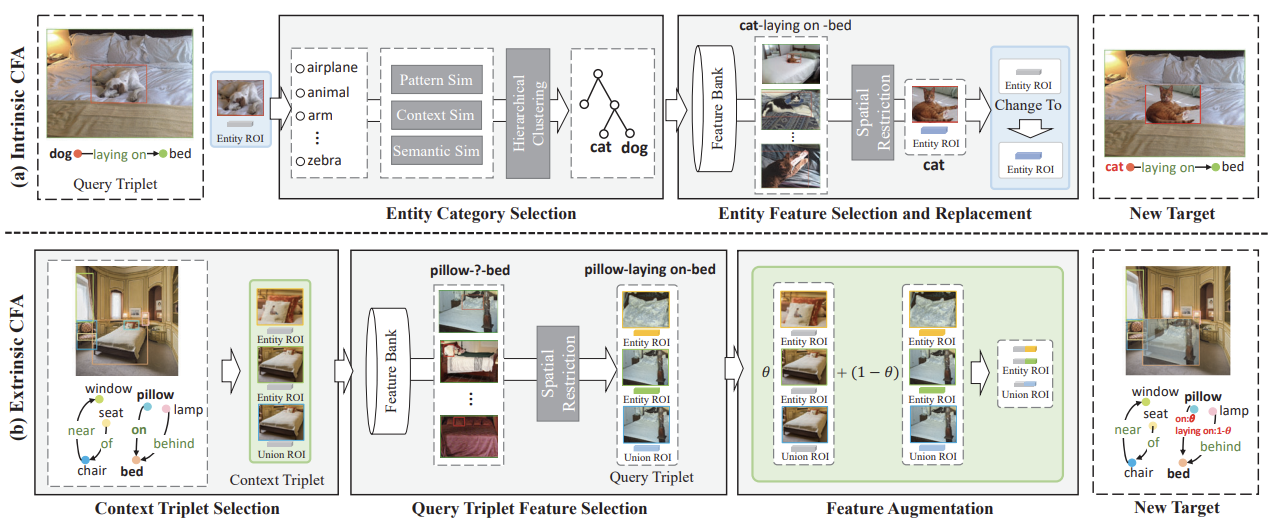

Compositional feature augmentation for unbiased scene graph generation

Lin Li, Guikun Chen, Jun Xiao, Yi Yang, Chunping Wang, Long Chen

- Tackling unbiased scene graph generation from the perspective of increasing the diversity of triplet features.

The devil is in the labels: Noisy label correction for robust scene graph generation

Lin Li, Long Chen, Yifeng Huang, Zhimeng Zhang, Songyang Zhang, Jun Xiao

- Reformulating scene graph generation (SGG) as a noisy label learning problem, and pointing out that two plausible assumptions about the ground-truth annotations do not hold for SGG.

(†: Corresponding author, *: Equal contribution, ♢: Student first author)

- [EMNLP 2025] RED: Unleashing Token-Level Rewards from Holistic Feedback via Reward Redistribution. Jiahui Li, Lin Li, Tai-Wei Chang, Kun Kuang, Long Chen, Jun Zhou, Cheng Yang

- [AI Magazine 2025] Recent Advances in Finetuning Multimodal Large Language Models. Zhen Wang*, Lin Li*, Long Chen

- [ACM MM 2025] Zero-shot compositional action recognition with neural logic constraints. Gefan Ye*, Lin Li*†, Kexin Li*, Jun Xiao, Long Chen

- [CVPR 2025 Highlight] CoMM: A coherent interleaved image-text dataset for multimodal understanding and generation. Wei Chen*, Lin Li*, Yongqi Yang*, Bin Wen, Fan Yang, Tingting Gao, Yu Wu, Long Chen

- [PR 2025] Knowledge integration for grounded situation recognition. Jiaming Lei, Sijing Wu, Lin Li†, Lei Chen, Jun Xiao, Yi Yang, Long Chen

- [ACM MM 2024] Seeing beyond classes: Zero-shot grounded situation recognition via language explainer. Jiaming Lei, Lin Li†, Chunping Wang, Jun Xiao, Long Chen

- [IJCV 2024] From easy to hard: Learning curricular shape-aware features for robust panoptic scene graph generation. Hanrong Shi*, Lin Li*†, Jun Xiao, Yueting Zhuang, Long Chen

- [TCSVT 2023] Label semantic knowledge distillation for unbiased scene graph generation. Lin Li, Jun Xiao, Hanrong Shi, Wenxiao Wang, Jian Shao, An-An Liu, Yi Yang, Long Chen

- [ICME 2023 Oral] Addressing predicate overlap in scene graph generation with semantic granularity controller. Guikun Chen*, Lin Li*, Yawei Luo, Jun Xiao

- [ESWA 2023] Question-guided feature pyramid network for medical visual question answering. Yonglin Yu, Haifeng Li, Hanrong Shi, Lin Li†

- [ACM MM 2021 Oral] Instance-wise or class-wise? A tale of neighbor Shapley for concept-based explanation. Jiahui Li, Kun Kuang, Lin Li, Long Chen, Songyang Zhang, Jian Shao, Jun Xiao

- [TMM 2020] Explore video clip order with self-supervised and curriculum learning for video applications. Jun Xiao, Lin Li♢, Dejing Xu, Chengjiang Long, Jian Shao, Shifeng Zhang, Shiliang Pu, Yueting Zhuang

🎖 Honors and Awards

- 2025 ACM HZ Chapter Doctoral Dissertation Award.

- 2025 2nd Place in the Data Curation for Vision-Language Reasoning (DCVLR) Challenge, NeurIPS 2025.

- 2023.10 Academic Star Training Program for Doctoral Students at Zhejiang University.

- 2022.09 Transfar Group Scholarship at Zhejiang University.

- 2021.09 National Scholarship.

- 2019 1st Place in the ImageCLEF Visual Question Answering (VQA) Challenge 2019.

- 2017.09 National Scholarship.